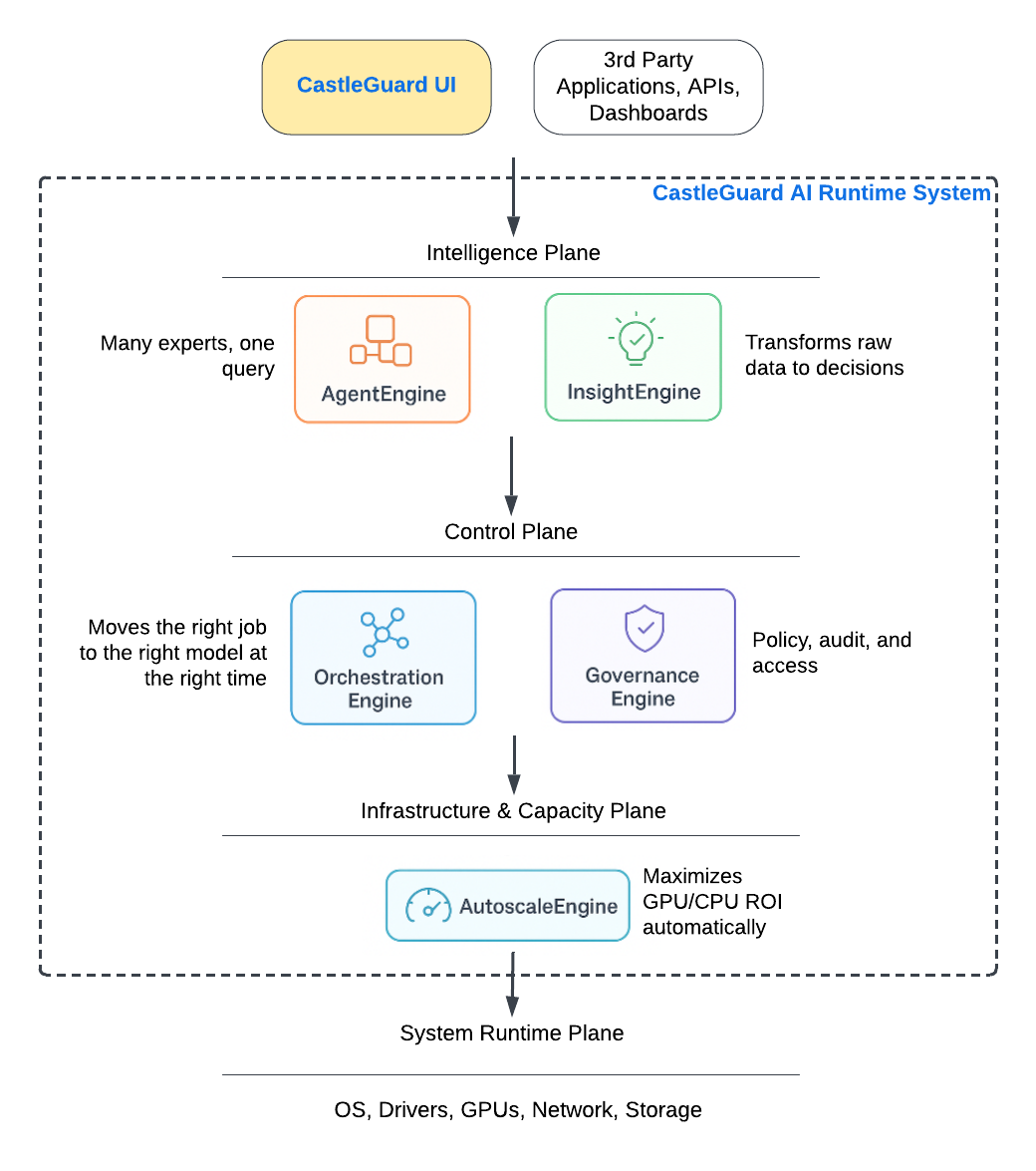

CastleGuard AI Under the Hood

CastleGuard Runtime is the execution layer that powers AI inside air-gapped and controlled environments.

Unlike typical chatbot platforms, CastleGuard is a runtime environment engineered from the ground up to operate in classified, air-gapped, and edge environments. It abstracts AI complexity while giving you full control over your models, data, and infrastructure — no Internet, no cloud dependency, and no compromise on sovereignty.

- Model flexibility – run any open-source or proprietary model

- High-performance – optimized for GPU acceleration & multi-node scaling

- Secure governance – full RBAC, LDAP integration, and audit logs

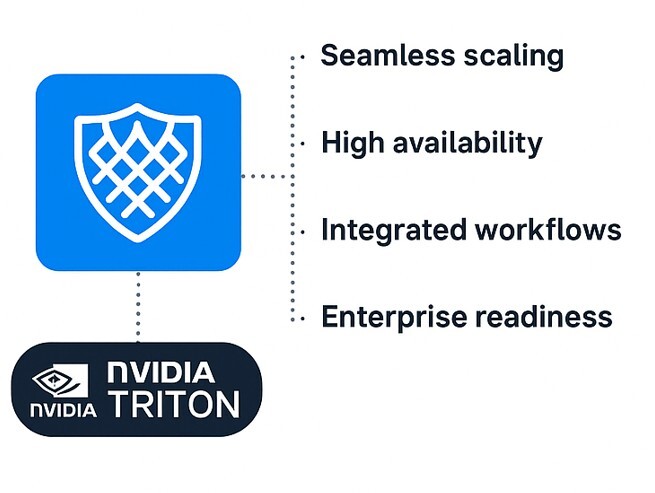

CastleGuard extends the capabilities of NVIDIA Triton Inference Server with our open-source initiative, the Citadel Triton AI Runtime System. While Triton provides a strong foundation for AI model serving, it is not designed to address the unique challenges of secure, enterprise, and air-gapped environments.

Citadel Triton Mesh adds:

- Seamless scaling – multi-node, multi-GPU, and cross-OS inference.

- High availability – dynamic load balancing, failover handling, and resilience under real-world latency.

- Integrated workflows – fine-tuning and training alongside inference.

- Enterprise readiness – deployment in air-gapped, disconnected, and classified environments.
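To make the load-balancing and failover idea concrete, here is a minimal Python sketch of round-robin dispatch that skips unhealthy nodes. It is not Citadel Triton Mesh's actual algorithm. The `Node` and `RoundRobinBalancer` names and the boolean `healthy` flag, which stands in for a real health probe, are hypothetical and used only for illustration.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Iterator, List


@dataclass
class Node:
    """A hypothetical inference node; `healthy` stands in for a real health probe."""
    name: str
    healthy: bool = True


@dataclass
class RoundRobinBalancer:
    nodes: List[Node]
    _counter: Iterator[int] = field(default_factory=count)

    def pick(self) -> Node:
        """Return the next healthy node in round-robin order, skipping failed nodes."""
        for _ in range(len(self.nodes)):
            node = self.nodes[next(self._counter) % len(self.nodes)]
            if node.healthy:
                return node
        raise RuntimeError("no healthy nodes available")


nodes = [Node("gpu-0"), Node("gpu-1"), Node("gpu-2")]
balancer = RoundRobinBalancer(nodes)
nodes[1].healthy = False  # simulate a failed node
print([balancer.pick().name for _ in range(4)])  # ['gpu-0', 'gpu-2', 'gpu-0', 'gpu-2']
```

Round-robin is the simplest policy. A latency-aware balancer would instead weight nodes by queue depth or recent response times.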

Unified runtime architecture

Five Engines. One Mission-Ready Platform.

CastleGuard’s architecture brings together five tightly integrated modules, each built for mission resilience and adaptability:

Manages and executes AI “agents” — specialized model-driven services like translation, transcription, or intelligence fusion. It can route a request to the right agent, dynamically combine outputs from multiple agents, and adapt workflows on the fly based on context or mission needs.
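The following toy illustration shows routing a request and combining agent outputs, assuming agents can be modelled as plain text-to-text functions. The `AGENTS` registry, the keyword-matching `route` function, and the simple concatenation used as the combining step are all hypothetical. They are not CastleGuard's routing logic, which is not documented here.

```python
from typing import Callable, Dict, List

# Hypothetical agents, each modelled as a text-to-text function.
AGENTS: Dict[str, Callable[[str], str]] = {
    "translate": lambda text: f"[translation of: {text}]",
    "transcribe": lambda text: f"[transcript of: {text}]",
    "summarize": lambda text: f"[summary of: {text}]",
}


def route(request: str) -> List[str]:
    """Naive keyword router: select every agent whose name appears in the request."""
    lowered = request.lower()
    return [name for name in AGENTS if name in lowered]


def run(request: str, payload: str) -> str:
    """Run each selected agent on the payload and combine the outputs in routing order."""
    selected = route(request)
    if not selected:
        raise ValueError(f"no agent matches request: {request!r}")
    return "\n".join(AGENTS[name](payload) for name in selected)


print(run("Please translate and summarize this memo", "memo text"))
```

A production router would more likely classify the request with a model than match keywords, but the shape of the flow is the same: select agents, run them, and merge their outputs.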

Transforms raw, multi-source data into actionable intelligence. Combines structured and unstructured data, applies retrieval-augmented generation, and uses domain-aware models to deliver accurate, context-rich outputs that support mission-critical decision-making.

Coordinates the flow of data and workloads between models, services, and infrastructure components. It manages task scheduling, dependency resolution, and pipeline execution so that multiple AI workloads can run smoothly in parallel, even across heterogeneous hardware and operating systems.
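Dependency resolution of this kind is commonly done with a topological sort. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to split a hypothetical pipeline into batches whose tasks could run in parallel. The task names are illustrative, and nothing here reflects CastleGuard's actual scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ocr": set(),
    "transcribe": set(),
    "translate": {"ocr"},
    "summarize": {"translate", "transcribe"},
    "report": {"summarize"},
}


def parallel_batches(graph):
    """Group tasks into batches whose dependencies are complete, so each batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the pipeline contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the whole batch finished before asking for the next one
    return batches


print(parallel_batches(pipeline))
```

Because `prepare()` rejects cycles up front, a misconfigured pipeline fails before any work is dispatched rather than deadlocking midway.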

Provides built-in policy enforcement, auditing, and access control across all AI operations. Integrates with LDAP directories for authentication and uses role-based access control (RBAC) so that only authorized users can run or modify models. Tracks usage, produces compliance reports, and logs all activity to meet defence and government security standards.
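Here is a minimal sketch of an RBAC check that records every decision in an audit trail. The role names, permission strings, and in-memory `AUDIT_TRAIL` list are hypothetical. In a real deployment, roles would typically be resolved from LDAP group membership, and the trail would be written to tamper-evident storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (roles might be derived from LDAP groups).
ROLE_PERMISSIONS = {
    "analyst": {"model:run"},
    "ml_engineer": {"model:run", "model:modify"},
}

# Append-only, in-memory audit trail for illustration only.
AUDIT_TRAIL = []


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


def authorize(user: User, permission: str) -> bool:
    """Allow the action if any of the user's roles grants it, and log the decision either way."""
    allowed = any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
    AUDIT_TRAIL.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user.name,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


alice = User("alice", frozenset({"analyst"}))
print(authorize(alice, "model:run"))     # True
print(authorize(alice, "model:modify"))  # False
```

Logging denials as well as grants matters for compliance reporting, since refused attempts are often the events auditors most want to see.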

Automatically adjusts compute resources in real time to match workload demands. Monitors GPU and CPU usage across your infrastructure, scaling tasks up during peak demand and down during idle periods to maximize efficiency and ROI. Designed for air-gapped and hybrid environments, it ensures uninterrupted performance without over-provisioning.
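One common way to express a scaling decision like this is the proportional rule used by Kubernetes' Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × observed utilization ÷ target utilization), clamped to a minimum and maximum. The sketch applies that rule to GPU utilization. The 70% target and the replica bounds are assumed values, and this rule is not presented as CastleGuard's scaling policy.

```python
import math


def desired_replicas(current: int, utilization: float, target: float = 0.7,
                     min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Proportional rule: scale replicas so average utilization moves toward the target."""
    if current < 1:
        raise ValueError("current must be at least 1")
    desired = math.ceil(current * utilization / target)
    # Clamp so we never scale below the floor or past the available GPUs.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 0.95))  # 3: busy, scale up
print(desired_replicas(4, 0.10))  # 1: mostly idle, scale down
```

Production autoscalers usually add a tolerance band and a cooldown window. These prevent brief utilization spikes from causing replicas to flap up and down.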

One Runtime. One Mission.

The CastleGuard AI Runtime isn’t just a collection of components — it’s a fully integrated, defence-grade platform that abstracts the complexity of AI deployment, scaling, orchestration, and governance.

Supported Language Models (LLMs & Sovereign SLMs)

CastleGuard supports any modern Large Language Model (LLM) architecture and also ships with Nextria’s sovereign Small Language Models (SLMs). Traditional LLMs deliver state-of-the-art generative performance but require larger GPU footprints. SLMs are compact, bilingual, domain-adaptable models designed for secure, air-gapped, edge, and resource-constrained deployments, and can be fine-tuned entirely inside closed networks.

| Model | VRAM Requirement | Recommended GPU(s) | GPU Shareable with Other Workloads? | Use Case Fit | Notes |
|---|---|---|---|---|---|
| LLaMA 3.3 – 70B | ≥48 GB | RTX A6000 (48 GB), H100 (80 GB), or B100 | No (dedicated GPU required) | High-accuracy Q&A, complex reasoning, multilingual generation | Best for enterprise or departmental clusters |
| LLaMA 3.1 – 8B | ≥24 GB | RTX 4090 (24 GB), RTX 5090 (32 GB), A6000, L40S, H100/B100 | Yes | General chat, translation, coding, summarization | Great for constrained power, cost, or footprint |
| Mistral 24B | ≥48 GB | A6000, L40S, H100/B100 | Yes | Fast inference, balanced Q&A and summarization | Strong mid-tier departmental option |
| CastleGuard SLM (Canadian Sovereign SLM) | 2–3 GB minimum | Runs efficiently on RTX 4090/5090, A6000, L40S, H100/B100 | Yes | Translation, Canadian French, fine-tuning, domain expert models | Ultra-light; ideal for tactical edge and GoC language patterns |
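A rough rule of thumb helps when reading the VRAM column. Model weights alone occupy about parameters × bits per parameter ÷ 8 bytes, and the KV cache and runtime buffers need headroom on top of that. The table does not state a numeric precision, so the bit widths below are assumptions. For example, the ≥48 GB figure for the 70B model is consistent with 4-bit quantized weights (35 GB), whereas 16-bit weights would need about 140 GB. The fixed `headroom_gb` value is also an assumption.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 parameters) * (bits / 8) bytes / 1e9 bytes per GB
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, vram_gb: float,
         headroom_gb: float = 4.0) -> bool:
    """True if the weights plus an assumed fixed headroom for KV cache/activations fit in VRAM."""
    return weight_memory_gb(params_billion, bits_per_param) + headroom_gb <= vram_gb


for name, params, bits in [("70B @ 4-bit", 70, 4), ("70B @ 16-bit", 70, 16), ("8B @ 16-bit", 8, 16)]:
    print(f"{name}: {weight_memory_gb(params, bits):.0f} GB of weights")
```

Real KV-cache needs grow with context length and the number of concurrent requests, so a flat headroom figure is only a first approximation.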

| Deployment Tier | Example Hardware | Expected Concurrency | Notes |
|---|---|---|---|
| Enterprise Cluster | Multi-GPU H100 or B100 nodes | ~60–100+ users | Full-scale agency/department rollouts |
| Departmental Tier | 4× A100 or 4× L40S | ~25–40 users | Shared service or departmental deployments |
| Workgroup | 2× A6000 or 2× L40S | 5–10 users | Secure labs, program teams, research cells |
| AI-at-the-Edge | RTX 4090/5090 or A6000 | 1 user | Portable, disconnected, air-gapped edge use |

Benchmarking Excellence: Proven Performance

CastleGuard doesn’t just match the world’s leading AI models — in critical domains, we outperform them. Our benchmarks prove that mission-ready AI can deliver state-of-the-art results and operate fully on-premises with unlimited usage, domain awareness, and security you control.

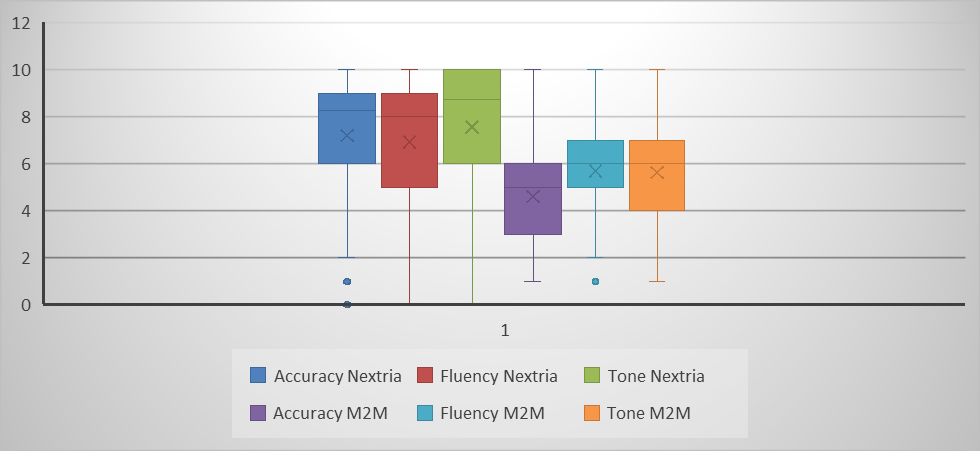

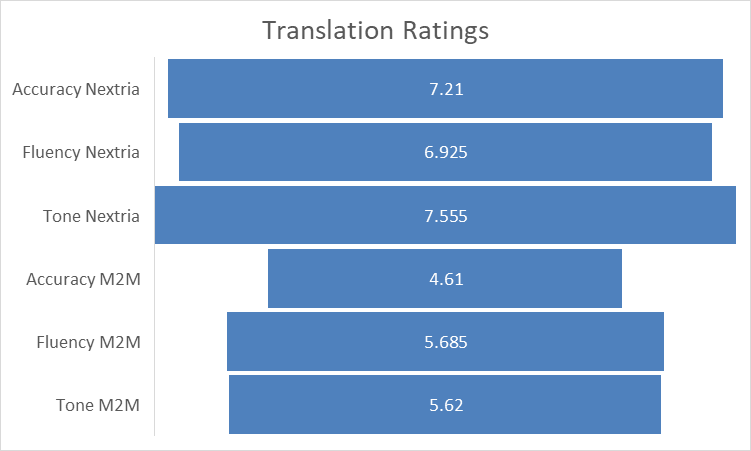

Translation Accuracy + On-Prem Advantage

CastleGuard Translation matches or exceeds the accuracy of Google Translate, Facebook M2M, and DeepL — and goes beyond with capabilities designed for secure, domain-specific deployments:

- On-premises or air-gapped deployment – operate in fully disconnected environments with unlimited translations and no data ever leaving your infrastructure.

- Domain-specific intelligence – optimized for defence, legal, and government contexts where precision and compliance matter.

- Acronym-aware outputs – understands and correctly expands or preserves sector-specific abbreviations.

- Hardware flexibility – deploy on anything from laptops to GPU clusters without vendor lock-in.
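One widely used way to keep acronyms intact through machine translation is to mask them with placeholder tokens before translating and then restore them afterwards. The sketch below shows that technique. The glossary contents, the `__ACRn__` token format, and the `translate` callable (a stand-in for whatever translation model is deployed) are hypothetical, and CastleGuard's own acronym handling may work differently.

```python
import re
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical glossary of acronyms that must survive translation unchanged.
PROTECTED_ACRONYMS = {"CAF", "DND", "RBAC"}


def mask_acronyms(text: str, acronyms: Iterable[str] = PROTECTED_ACRONYMS) -> Tuple[str, Dict[str, str]]:
    """Replace each protected acronym with a numbered placeholder token."""
    mapping: Dict[str, str] = {}
    # Longest acronyms first so that overlapping entries match the longer one.
    alternatives = sorted((re.escape(a) for a in acronyms), key=len, reverse=True)
    if not alternatives:
        return text, mapping

    def _substitute(match: "re.Match[str]") -> str:
        token = f"__ACR{len(mapping)}__"
        mapping[token] = match.group(0)
        return token

    pattern = r"\b(?:" + "|".join(alternatives) + r")\b"
    return re.sub(pattern, _substitute, text), mapping


def unmask(text: str, mapping: Dict[str, str]) -> str:
    """Put the original acronyms back in place of their placeholder tokens."""
    for token, acronym in mapping.items():
        text = text.replace(token, acronym)
    return text


def translate_preserving_acronyms(text: str, translate: Callable[[str], str]) -> str:
    masked, mapping = mask_acronyms(text)
    return unmask(translate(masked), mapping)


# Stand-in "translator" that only translates one word, for demonstration.
fake_translate = lambda s: s.replace("members", "membres")
print(translate_preserving_acronyms("CAF members use RBAC", fake_translate))  # CAF membres use RBAC
```

Masking only preserves terms. To expand an acronym (for example, spelling it out on first use), you would need a glossary that maps each acronym to its expansion in the target language.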

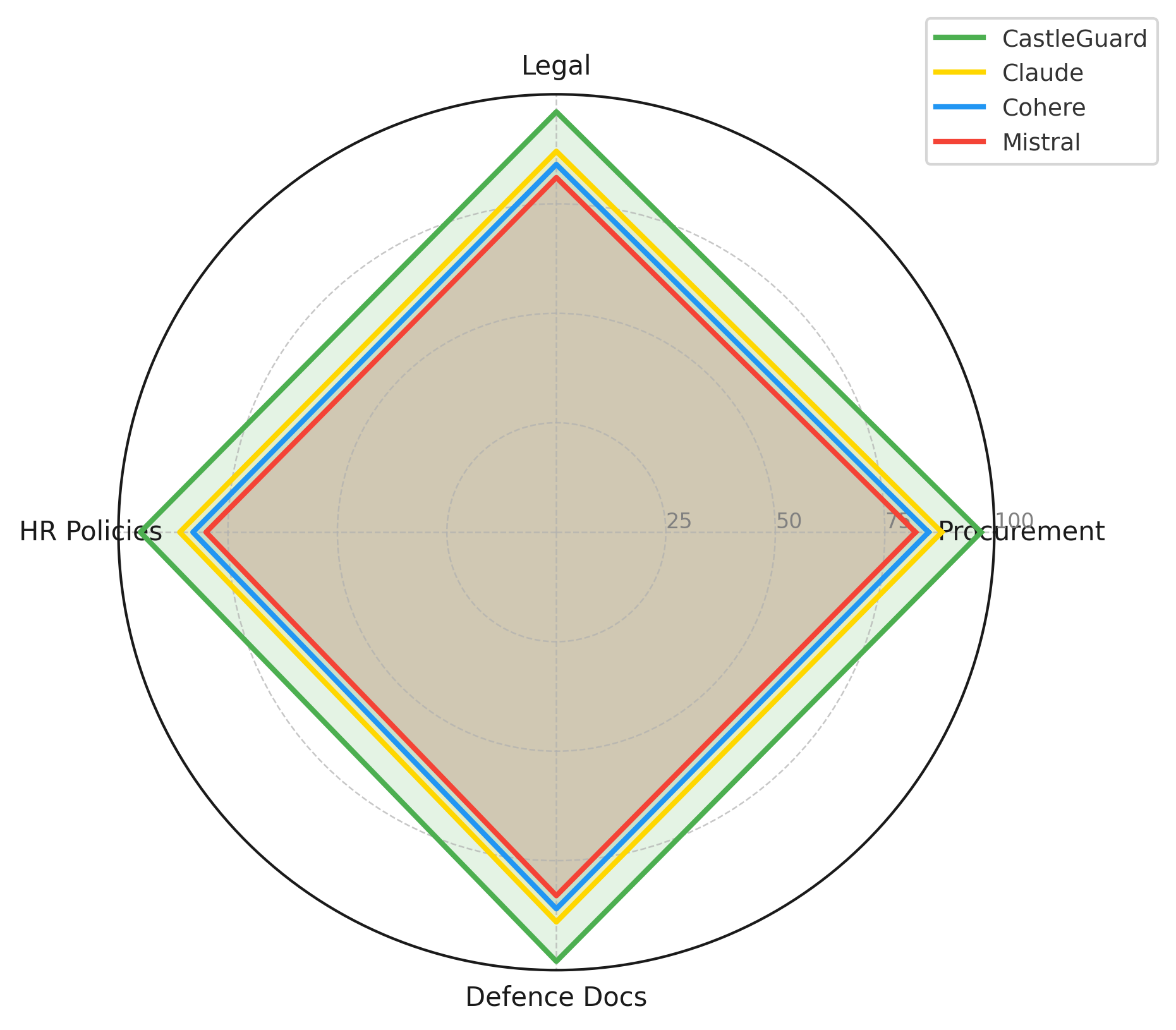

Domain-Specific LLM Accuracy

In legal, procurement, defence documentation, and HR policies, CastleGuard outperforms Claude, Cohere, and Mistral. Our domain-trained models deliver:

- Higher factual accuracy – consistently returns correct, verifiable answers for regulated and compliance-heavy sectors.

- Fewer hallucinations – rigorous training on curated, domain-specific datasets minimizes fabricated content.

- Terminology precision – built-in awareness of sector-specific terms, acronyms, and phrases ensures alignment with internal language standards.

As shown in the chart, CastleGuard consistently scores at the top across all four domains (procurement, legal, HR policies, and defence documents), ensuring dependable performance where accuracy matters most.

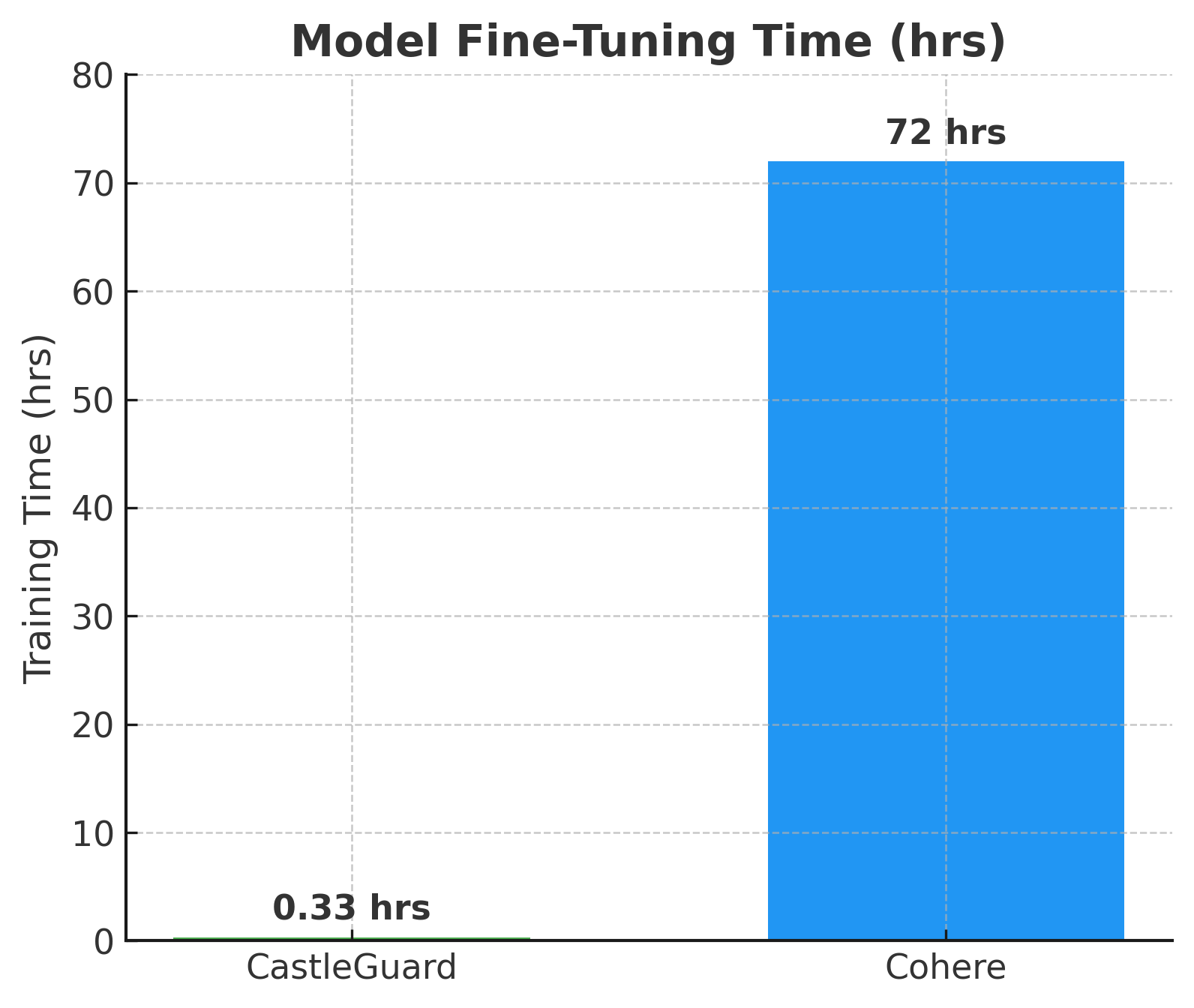

Faster Fine-Tuning, Faster ROI

Fine-tuning a CastleGuard model takes just 0.33 hours (~20 minutes) versus 72 hours for a Cohere model using the same GPU capacity. This speed advantage translates into:

- Rapid deployment cycles – launch AI capabilities in a single afternoon instead of waiting multiple days for fine-tuning to complete.

- Lower compute costs – use roughly 1/218 of the GPU hours per tuning cycle, freeing capacity for other mission-critical workloads.

- Scalable for large on-prem workloads – for 10,000 internal documents, CastleGuard can produce a tuned, domain-aware model in hours rather than weeks, even in air-gapped environments.

In the chart, CastleGuard’s efficiency gain (0.33 hrs vs. 72 hrs, roughly 218× faster) is shown as 21,800% training efficiency; the higher the bar, the faster the ROI.
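The headline ratio can be checked directly from the two stated times. This short Python snippet divides 72 hours by 0.33 hours. Note that the result shifts slightly depending on whether the CastleGuard time is taken as 0.33 hours or as exactly 20 minutes. Also note that a ratio r corresponds to r × 100% of the baseline, or (r − 1) × 100% more than the baseline.

```python
def speedup(baseline_hours: float, new_hours: float) -> float:
    """How many times faster the new run is than the baseline."""
    return baseline_hours / new_hours


def as_percent_of_baseline(ratio: float) -> float:
    return ratio * 100


def as_percent_greater(ratio: float) -> float:
    return (ratio - 1) * 100


r = speedup(72, 0.33)           # 0.33 h, as stated
r_exact = speedup(72, 20 / 60)  # exactly 20 minutes
print(f"{r:.0f}x vs {r_exact:.0f}x")  # 218x vs 216x
print(f"{as_percent_of_baseline(r):,.0f}% of baseline, {as_percent_greater(r):,.0f}% greater")  # 21,818% of baseline, 21,718% greater
```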

From Research to Reality

CastleGuard AI is built on years of applied AI research in partnership with Canada’s national defence science teams. Every breakthrough from this work has been implemented and improved in our runtime environment — turning theoretical advances into deployable, operational features.

Research-driven capabilities now in CastleGuard include:

- Domain-aware multilingual translation with rapid acronym updates – proven in French-Canadian translation and in the world’s first defence-focused Arabic model, which adapts to local dialects.

- Advanced document understanding using custom OCR, semantic segmentation, and badge recognition for accurate parsing of complex files.

- Extended-context reasoning and precise meeting summarization, built into our InsightEngine.

These innovations, combined with our multi-OS orchestration, real-time expert fusion, and one-prompt fine-tuning, make CastleGuard a secure, sovereign AI platform that bridges cutting-edge research and mission-ready performance.

CastleGuard vs. the Rest

CastleGuard brings together features that other platforms offer separately — if at all.

| Capability | CastleGuard AI | Others (e.g. Cohere, H2O.ai, AskSage) | User Benefit |
|---|---|---|---|
| Multi-OS Node Cluster Support | ✅ | ❌ | Deploys on Windows and Linux with your current infrastructure; no new hardware needed. |
| Unified Installer | ✅ | ❌ | A single installer means faster setup with less complexity. |
| On-Prem Any↔Any Translation | ✅ | ❌ | Translate documents safely on-site; your data never leaves your network. |
| Live Expert Fusion | ✅ | ❌ | Get more accurate answers by combining insights from multiple expert models. |
| Smart AI Mesh Scaling | ✅ | ❌ | Scales models automatically for faster responses and efficient GPU use. |
| One-Prompt Domain Fine-Tuning | ✅ | ⚠️ (complex or cloud-based) | Build custom AI expert models from your documents in hours, with no ML team required. |
| Acronym Injection | ✅ | ❌ | Quickly add terms so AI uses your organization’s language right away. |
| Expert Switching | ✅ | ❌ | Get answers that adjust to multiple topics, even within the same response. |
| LDAP / Identity Integration | ✅ | ❌ | Easily integrates into your current user directory for secure access control. |
| Role-Based Access Control (RBAC) | ✅ | ❌ | Limit access to models and features by team, project, or role. |
| Governance, Usage & Audit Reports | ✅ | ❌ | Full visibility and traceability over AI use to meet regulatory and security needs. |